In the previous post in this series, we talked about native database usage versus Amazon RDS. We established some reasons why you might choose Amazon RDS. In this post we will see what Amazon provides in terms of disaster recovery (DR) and scalability. Amazon RDS makes both so easy to set up that a couple of clicks here and there are enough. Let's look at each of these individually.

Disaster recovery is about having something to fall back on when you lose your data in a disaster. To achieve this, you keep a copy of your data that is updated in real time alongside the original. I recently ran into a senior project manager at a conference who told me:

"Chances of losing data in cloud is almost zero. We don't need disaster recovery at all. We have to do it only because our customers want to hear we have DR in place."

Living in the information age, and with a background in database replication, I found that hard to digest. Take Facebook's acquisition of WhatsApp. There are multiple interpretations of why Facebook paid that whopping amount, and to me the most compelling one is the user base (aka the logical asset) of WhatsApp as a platform. That is how critical data can be. In the age of personalization, data is, in my opinion, soon going to be the single most precious thing to own. Needless to say, being paranoid about your data is the way to go. Make sure your data is safe at any cost, and that is where disaster recovery kicks in.

Getting back to the startup use case, disaster recovery is something that needs careful implementation. Automatic failover is ideal, but not many database providers offer automatic failover with zero downtime, and Amazon does not offer zero downtime either. Assuming you are a startup and your aim at the moment is disaster recovery, not necessarily with zero downtime, you can again fall back on Amazon's multi-AZ configuration.

Multi-AZ configuration is an architecture where a redundant copy of your data is replicated synchronously to a standby in another Availability Zone. When I say synchronously, do realize that in theory this should impact performance, because a write has to reach both copies. For all practical purposes, the impact is negligible and I would expect this to be something you can simply ignore. Setting up multi-AZ configuration is super quick with Amazon RDS. A couple of clicks on their UI and you are done. A word of caution, again from the startup perspective: multi-AZ is roughly twice as costly as a single server deployment. That's the only flip side of having a multi-AZ deployment as I see it.
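If you prefer scripting to clicking, the same switch can be set through the AWS SDK. The following is a minimal sketch, assuming Python with boto3 and a MySQL engine. The instance identifier, instance class, storage size, username and password are placeholders I made up for illustration, not values from this series. The helper only builds the request; the actual call needs boto3 installed and AWS credentials configured.

```python
def multi_az_instance_params(identifier, password, multi_az=True):
    """Build keyword arguments for boto3's RDS create_db_instance call."""
    return {
        "DBInstanceIdentifier": identifier,
        "Engine": "mysql",                 # assumption: a MySQL-backed instance
        "DBInstanceClass": "db.t3.micro",  # assumption: a small instance class
        "AllocatedStorage": 20,            # GiB, placeholder value
        "MasterUsername": "admin",         # placeholder value
        "MasterUserPassword": password,
        "MultiAZ": multi_az,               # the flag that asks for a standby copy
    }


def create_instance(params):
    """Send the request to AWS (requires boto3 and configured credentials)."""
    import boto3  # imported lazily so the sketch loads without boto3

    rds = boto3.client("rds")
    return rds.create_db_instance(**params)


# Hypothetical identifier and password, for illustration only.
params = multi_az_instance_params("startup-db", "change-me-please")
```

Calling `create_instance(params)` would then submit the request. Passing `multi_az=False` gives the cheaper single server deployment with no standby.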

Lets now turn to the second subtopic-

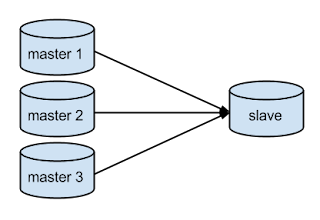

scalability. The most obvious solution to scalability is adding a server (in the technical lingo, slave) that replicates asynchronously from your main server (master). In my experience, I generally see people worrying too much about asynchronous nature of replication fearing data inconsistency. Think hard about your use cases- asynchronous could just be enough. And if it is, Amazon RDS has again something called

read-replicas to cater to this. So you will have an architecture like this:

The source here is the multi-AZ deployment that we discussed earlier, and the sinks are the slave servers. Once you have this setup you can scale on the go. Whenever you see a bottleneck on the database end, buy one more Amazon RDS instance and attach it as a read replica. The more scalability you need, the more slaves you add. Simple enough! Of course this is not the ultimate solution forever, because managing tons of slaves isn't the easiest job out there. But as a startup, if you have hit this problem you are really successful and should now be able to afford some DBAs to advise you anyway. We will look at the problems on this end and solutions to them in a separate post. For now it's time to look back and pat yourself on the back for being quite successful as a startup, successful enough to have so much data. Keep your data safe and have fun with it!
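On the application side, this architecture means writes go to the source and reads can be spread across the replicas. Here is a minimal sketch of that idea in Python, assuming a simple round-robin policy. The `ReadWriteRouter` class and the endpoint names are hypothetical and only illustrate the routing decision. They are not part of Amazon RDS.

```python
import itertools


class ReadWriteRouter:
    """Send writes to the source and spread reads across read replicas."""

    def __init__(self, source, replicas):
        self.source = source
        # Fall back to the source while no replica has been attached yet.
        self._readers = itertools.cycle(replicas or [source])

    def endpoint_for(self, sql):
        """Return the endpoint that should run the given SQL statement."""
        words = sql.split()
        is_read = bool(words) and words[0].upper() == "SELECT"
        # Only plain SELECT statements are treated as reads in this sketch.
        return next(self._readers) if is_read else self.source


# Hypothetical endpoint names, for illustration only.
router = ReadWriteRouter(
    "source.example.internal",
    ["replica-1.example.internal", "replica-2.example.internal"],
)
write_target = router.endpoint_for("INSERT INTO users (name) VALUES ('a')")
read_target = router.endpoint_for("SELECT name FROM users")
```

Because the replicas are asynchronous, a read that must see a write made a moment ago is safer sent to the source. Attaching another replica then amounts to adding one more endpoint to the list.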
